Linux Spin Lock Limitation proof

by yy813 (CID 00848280)

This is a simple proof of some limitations of the Linux spin lock mechanism. For background, see this post: https://yymath.github.io/2018/01/07/linux_spin_lock/. All rights reserved.

Step 1

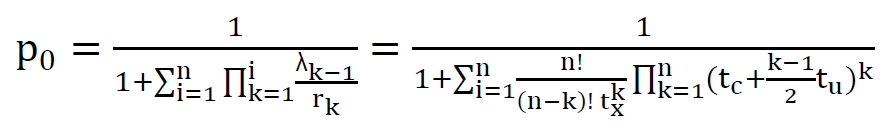

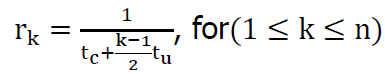

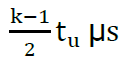

When k cores are either using or queued on the lock, the corresponding arrival rate is:

Step 2

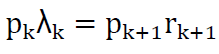

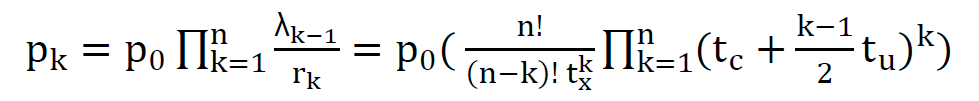

At equilibrium, the rate of transitions out of state k equals the rate of transitions into state k. The general closed-form relationship between state k and state k+1 is then:

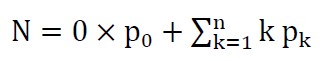

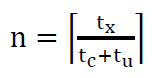

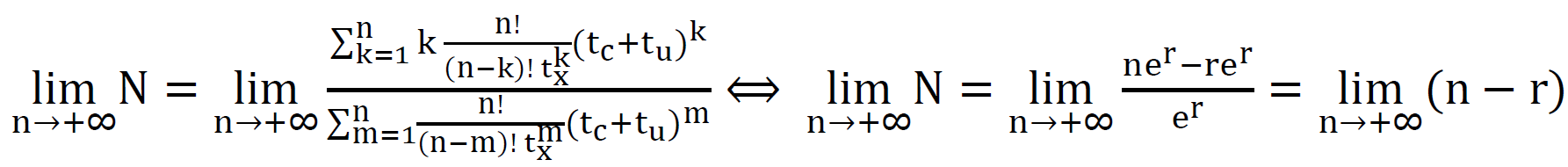
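The balance relation above can be turned into a short numerical sketch. The version below assumes the usual birth-death form, P(k+1) × completion_rate(k+1) = P(k) × arrival_rate(k), and normalises the probabilities so they sum to one. The function name, the parameter values and the two rate functions are hypothetical placeholders chosen for illustration; they are not the formulas shown in the images.

```python
from typing import Callable, List


def stationary_distribution(
    n: int,
    arrival_rate: Callable[[int], float],
    completion_rate: Callable[[int], float],
) -> List[float]:
    """Return [P_0, ..., P_n] for a birth-death chain with states 0..n.

    Applies P_{k+1} * completion_rate(k + 1) = P_k * arrival_rate(k)
    repeatedly, then normalises so the probabilities sum to 1.
    """
    weights = [1.0]  # unnormalised weight of state 0
    for k in range(n):
        weights.append(weights[-1] * arrival_rate(k) / completion_rate(k + 1))
    total = sum(weights)
    return [w / total for w in weights]


# Hypothetical parameters and rates, for illustration only.
n = 8
t_x, t_c, t_u = 300.0, 10.0, 2.0  # microseconds, placeholder values


def arrival(k: int) -> float:
    # Assumption: each of the n - k cores not at the lock requests it at rate 1 / t_x.
    return (n - k) / t_x


def completion(k: int) -> float:
    # Assumption: service slows down as more cores (k) hold or wait for the lock.
    return 1.0 / (t_c + k * t_u)


P = stationary_distribution(n, arrival, completion)
```

Passing the rates as functions keeps the recursion independent of any particular lock model, so the same routine can be reused once the actual arrival and completion rates are substituted.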

Total population N (the mean number of cores either using or waiting for the lock) is:

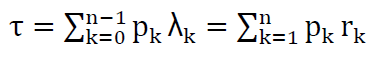

By definition, throughput is the sum over all states of the completion rate multiplied by the probability of that state, which also equals the sum of the arrival rate multiplied by the corresponding state probability:

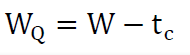

According to Little’s Law:
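In its generic form, Little's Law states that the mean population equals the throughput multiplied by the mean time spent in the system. A minimal sketch of how the population N and the throughput combine is given below. The function names and the two-state example are hypothetical, and the result is the total time a core spends at the lock (waiting plus critical section), which may differ from the exact quantity plotted in the figures.

```python
from typing import Sequence


def mean_population(P: Sequence[float]) -> float:
    """N = sum over k of k * P_k."""
    return sum(k * p for k, p in enumerate(P))


def throughput(P: Sequence[float], arrival_rates: Sequence[float]) -> float:
    """Throughput as the probability-weighted sum of per-state arrival rates."""
    return sum(p * a for p, a in zip(P, arrival_rates))


def mean_time_at_lock(P: Sequence[float], arrival_rates: Sequence[float]) -> float:
    """Little's Law rearranged: time = N / throughput."""
    return mean_population(P) / throughput(P, arrival_rates)


# Hypothetical single-core example: arrival rate 2 in state 0, completion
# rate 2 in state 1, so the two states are equally likely.
P = [0.5, 0.5]
arrival_rates = [2.0, 0.0]  # no further arrivals once the only core holds the lock
W = mean_time_at_lock(P, arrival_rates)  # 0.5 time units, i.e. 1 / completion rate
```

With a single core there is never any queueing, so the result reduces to the mean service time, which is a quick sanity check on the rearrangement.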

Step 3

From the coursework specification, the “speed-up” can be expressed as: Speedup = n − N, where n is the number of cores.

Figure 1.1 and Figure 1.2 show the queuing time and the speed-up against the number of cores for different values of tx.
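A sweep of this kind can be sketched as follows. The code computes Speedup = n − N for a range of core counts using placeholder rates: an arrival rate of (n − k) / t_x and a completion rate of 1 / (t_c + k × t_u). Both rates and all parameter values are assumptions made for illustration, so the numbers will not match the figures, and whether a collapse appears depends on the values chosen.

```python
def speedup(n: int, t_x: float, t_c: float, t_u: float) -> float:
    """Return n - N for a birth-death lock model with placeholder rates."""
    weight = 1.0    # unnormalised probability of the current state
    total = 1.0     # running sum of weights (normalisation constant)
    weighted = 0.0  # running sum of k * weight_k
    for k in range(n):
        arrival = (n - k) / t_x                   # assumed rate out of state k
        completion = 1.0 / (t_c + (k + 1) * t_u)  # assumed rate out of state k + 1
        weight *= arrival / completion
        total += weight
        weighted += (k + 1) * weight
    mean_population = weighted / total            # this is N
    return n - mean_population


# Hypothetical parameter values in microseconds.
for cores in (1, 2, 4, 8, 16, 32, 48):
    print(cores, round(speedup(cores, t_x=300.0, t_c=10.0, t_u=2.0), 2))
```

Setting t_u to zero in this sketch makes the completion rate independent of k, which is one simple way to compare a contended lock against an idealised one under the same assumptions.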

Step 4

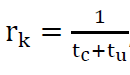

For an ideal ticket spinlock, the completion rate becomes:

Step 5

The collapse in speed-up observed in Figure 1.2 occurs because the average number of cores either executing or waiting (N) grows faster than the number of cores (n) itself. The main reason is cache consistency, which is assumed to be the bottleneck of the ticket spinlock model. This is because updating every locally cached copy of shared memory takes

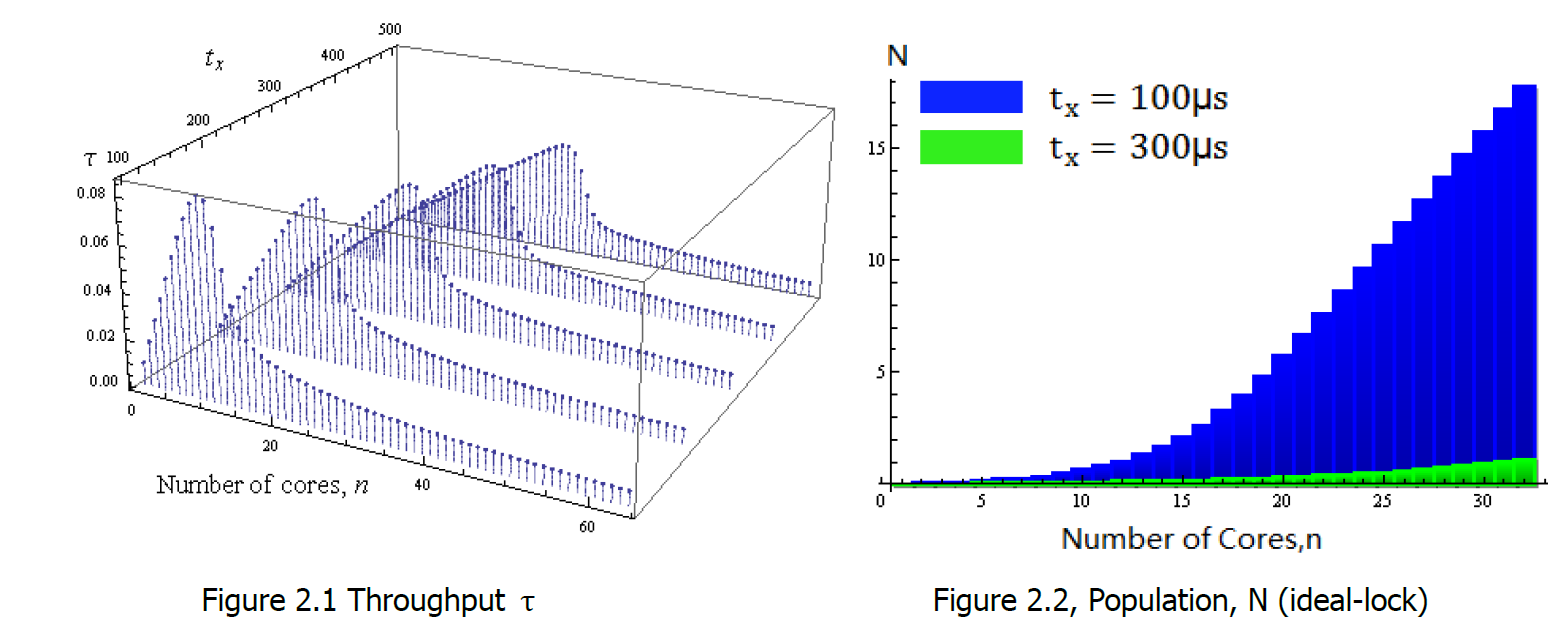

In Figure 1.1, at tx = 300 μs, a small hump can be observed. This hump corresponds to the speed-up collapse. Figure 2.1 shows five throughput plots for tx values of 100, 200, 300, 400 and 500 μs. The number of cores at which the collapse occurs clearly increases as tx increases.

As Figure 1.2 and Figure 1.3 show, whether the ideal spinlock gives linear speed-up depends on the number of cores and on the parameters tx, tc and tu. However, the speed-up is not “perfectly” linear at any point. As Figure 2.2 demonstrates, the population N is not constant, so the speed-up n − N is not “perfectly” linear in n. Beyond a certain point, the increase in the average number of cores either waiting or executing (N) becomes equal to the increase in the number of cores (n). At this point the previously linear speed-up reaches a plateau that presumably never ends. According to the approximation made as part of this coursework, the plateau occurs once the number of cores reaches:

In the model, tc, the average time spent in the critical section, should depend on the number of cores waiting for the lock. This is because cores cache not only the 2 bytes of the spinlock but the whole cache line, which is 64 bytes on Intel Core i3-i7. The owner core needs exclusive access to the cache line in order to write to the surrounding data. However, the other cores, constantly acquiring shared access to the spinlock cache line, prevent the owning core from acquiring that exclusive access. This results in a cache miss for every write the owning core performs to the spinlock cache line. The throughput can be reduced by a factor of two for k=1 and by a factor of 10 for k>1 (Corbet, J. 2013) [1].

Another model reserves one core as a dispatcher that passes inter-core messages and manages queues. However, the more critical sections it manages, the more complicated and “slow” the model becomes, owing to the very limited cache size.

[1] Jonathan Corbet (2013), “Improving ticket spinlocks”. Available at: http://lwn.net/Articles/531254/. Retrieved on 2013.10.29.